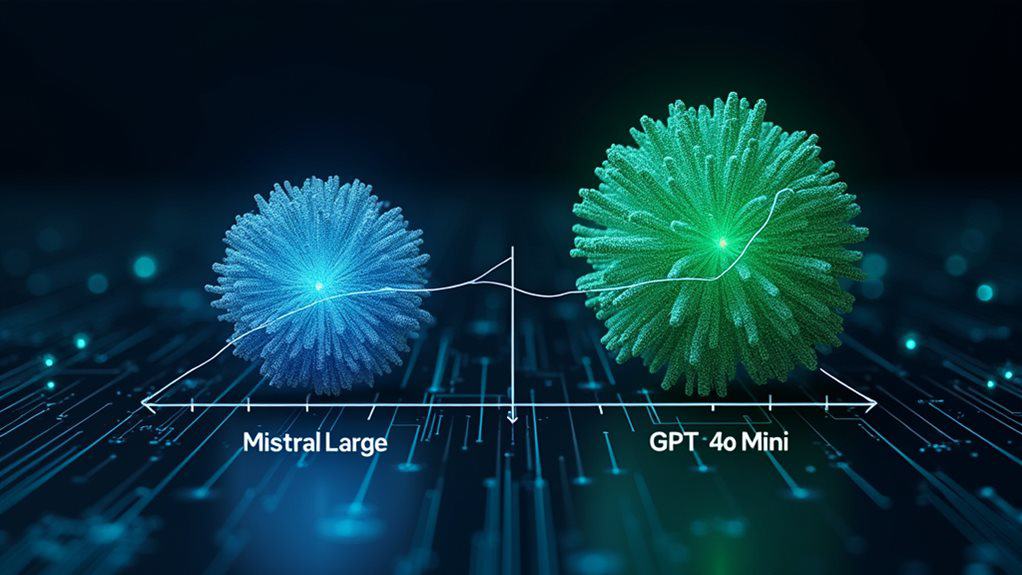

Mistral AI's Large model offers impressive performance compared to OpenAI's GPT-4o Mini despite having fewer parameters. Both models post similar MMLU scores (Mistral Large: 81.2 vs GPT-4o Mini: 82.0), but Mistral's model is considerably more expensive at $8.00 per million tokens versus GPT-4o Mini's $0.15 (input) and $0.60 (output). While Mistral Large is open-source and text-only, GPT-4o Mini handles both text and images. The parameter efficiency suggests architectural advances worth exploring further.

Despite being released several months earlier, Mistral AI's Large model has demonstrated superior parameter efficiency compared to OpenAI's GPT-4o Mini. This efficiency suggests that Mistral's approach to model design prioritizes doing more with less, potentially changing how we think about AI development.

You'll find that both models represent notable advancements in AI technology, but they excel in different areas. Mistral Large was released in February 2024, while GPT-4o Mini followed in July 2024. That five-month head start may have given Mistral extra time to refine its model architecture.

When comparing performance benchmarks, the competition is remarkably close. GPT-4o Mini scored 82.0 on the MMLU benchmark in a 5-shot scenario, just slightly ahead of Mistral Large's 81.2. However, Mistral Large showed impressive results on the HellaSwag benchmark with 89.2 in a 10-shot scenario.

The cost difference between these models is substantial. GPT-4o Mini costs $0.15 per million input tokens and $0.60 per million output tokens. In contrast, Mistral Large is priced at $8.00 per million tokens for both input and output. Measured against the simple average of GPT-4o Mini's two rates ($0.375), Mistral Large is approximately 21.3x more expensive; the gap is about 53x on input tokens and about 13x on output tokens.
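The 21.3x figure can be reproduced from the quoted prices if GPT-4o Mini's input and output rates are averaged equally. The sketch below is a minimal illustration under that assumption. The function names and the example workload (2 million input tokens, 0.5 million output tokens) are hypothetical, chosen only to show how the ratio shifts with the input/output mix.

```python
# Prices in USD per million tokens, as quoted in the article.
MISTRAL_LARGE = {"input": 8.00, "output": 8.00}
GPT_4O_MINI = {"input": 0.15, "output": 0.60}


def blended_price(prices, input_share=0.5):
    """Price per million tokens for a given share of input tokens."""
    return prices["input"] * input_share + prices["output"] * (1 - input_share)


def workload_cost(prices, input_tokens, output_tokens):
    """Total USD cost for a workload with the given token counts."""
    return (
        input_tokens * prices["input"] + output_tokens * prices["output"]
    ) / 1_000_000


# Ratios on each side of the price list, plus the equal-weight blend.
input_ratio = MISTRAL_LARGE["input"] / GPT_4O_MINI["input"]
output_ratio = MISTRAL_LARGE["output"] / GPT_4O_MINI["output"]
blended_ratio = blended_price(MISTRAL_LARGE) / blended_price(GPT_4O_MINI)

# Hypothetical workload: 2M input tokens and 0.5M output tokens.
mistral_cost = workload_cost(MISTRAL_LARGE, 2_000_000, 500_000)
mini_cost = workload_cost(GPT_4O_MINI, 2_000_000, 500_000)

print(f"input ratio:   {input_ratio:.1f}x")
print(f"output ratio:  {output_ratio:.1f}x")
print(f"blended ratio: {blended_ratio:.1f}x")
print(f"workload cost: ${mistral_cost:.2f} vs ${mini_cost:.2f}")
```

Because Mistral Large charges the same rate in both directions while GPT-4o Mini charges less for input, the effective multiple grows as a workload becomes more input-heavy.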

You'll notice a clear distinction in supported modalities. GPT-4o Mini can process images alongside text, while Mistral Large remains text-only. This limitation might affect Mistral's versatility in certain applications. GPT-4o Mini is also credited with improved resistance to jailbreaks, which gives it an additional security advantage in enterprise settings.

The open-source nature of Mistral Large sets it apart from the proprietary GPT-4o Mini. This accessibility could be valuable for researchers and developers who need transparency in their AI systems.

The parameter efficiency of Mistral Large represents an important advancement in AI development. You're witnessing a shift toward models that can achieve comparable performance with fewer resources, potentially reducing computational requirements and environmental impact.

As AI continues to evolve, this competition between Mistral AI and OpenAI pushes both companies to improve their models, ultimately benefiting users who need powerful, efficient language processing capabilities.

Frequently Asked Questions

What Specific Tasks Does Mistral's Model Struggle With?

You'll find Mistral's model struggles with several specific tasks.

It's prone to hallucinations, providing inaccurate information in some cases. The model is also vulnerable to prompt injections, where injected text can cause it to ignore your instructions.

Complex reasoning tasks can be challenging compared to larger models. Content moderation presents difficulties, especially with nuanced content.

The model also faces knowledge storage limitations due to its parameter size, restricting how much information it can effectively retain.

How Much Did Mistral AI Invest in Developing This Model?

The exact investment Mistral AI devoted specifically to developing this model isn't publicly disclosed in the available information.

While the company has raised over $1.04 billion in total funding, including €600 million in its June 2024 Series B round, it hasn't revealed the precise allocation for individual model development.

You'd need additional financial statements or company disclosures to determine the specific development costs for this particular model.

When Will the Model Be Available for Public Use?

You can access Mistral Large 24.11 now on Google Cloud's Vertex AI as part of its Model-as-a-Service offering.

Mistral Large 2 is already available for commercial deployment through IBM Watsonx.

Mistral AI has also released open-source models like Small 3, which you can run locally on suitable devices.

While specific public release dates for newer models aren't explicitly mentioned, the company continues to expand availability across different platforms.

Can Mistral's Technology Be Integrated With Existing Enterprise Systems?

Yes, Mistral AI can be fully integrated with your existing enterprise systems.

You'll benefit from API connections that enable seamless communication between Mistral's models and your applications.

The technology supports on-premise deployment for security compliance and offers customization to meet your specific business needs.

Your enterprise can leverage built-in connectors for PyTorch and TensorRT-LLM, containerized deployment via Docker and Kubernetes, and Azure AD integration for secure authentication.
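As a rough illustration of what an API connection might look like, the sketch below builds a chat request using only the Python standard library. It assumes Mistral's hosted chat completions endpoint at `https://api.mistral.ai/v1/chat/completions`, the `mistral-large-latest` model alias, and bearer-token authentication; check these against the current API reference before relying on them. The helper names `build_chat_request` and `send` are hypothetical.

```python
import json
import urllib.request

# Assumed values: verify against Mistral's current API reference.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-large-latest"


def build_chat_request(api_key, user_message, model=MODEL):
    """Build (but do not send) a POST request for a single-turn chat."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the decoded JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


# Building the request needs no network access; calling send() does.
request = build_chat_request("YOUR_API_KEY", "Summarize our Q3 support tickets.")
```

Keeping request construction separate from sending makes the payload easy to inspect or log inside an enterprise gateway before any data leaves your network.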

What Safety Measures Prevent Misuse of Mistral's New Model?

You'll find several safety measures in place to prevent misuse of Mistral's model. These include model guardrails with safety prompts, AI Moderation API with customizable standards, and content categorization to filter harmful content.
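To make the safety-prompt guardrail concrete, here is a minimal sketch of how a chat payload might opt in. It assumes the optional `safe_prompt` boolean that Mistral's API documentation describes for chat requests; treat the exact behavior as something to confirm in the current docs. The helper name `with_safety_prompt` and the sample message are hypothetical.

```python
import copy


def with_safety_prompt(payload, enabled=True):
    """Return a copy of a chat payload with the safe_prompt flag set.

    Assumption: the API accepts an optional boolean `safe_prompt` field
    that adds a safety-oriented system prompt on the server side.
    """
    guarded = copy.deepcopy(payload)  # leave the caller's payload untouched
    guarded["safe_prompt"] = enabled
    return guarded


base_payload = {
    "model": "mistral-large-latest",
    "messages": [{"role": "user", "content": "Draft a reply to this customer."}],
}
guarded_payload = with_safety_prompt(base_payload)
```

Applying the flag in one helper, instead of at each call site, gives you a single place to enforce the policy across an application.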

Mistral also implements phased deployment to assess risks before full release, conducts red teaming exercises to identify vulnerabilities, and uses detection technology to remove abusive content.

Their approach combines technical safeguards with clear usage policies to protect against potential misuse.